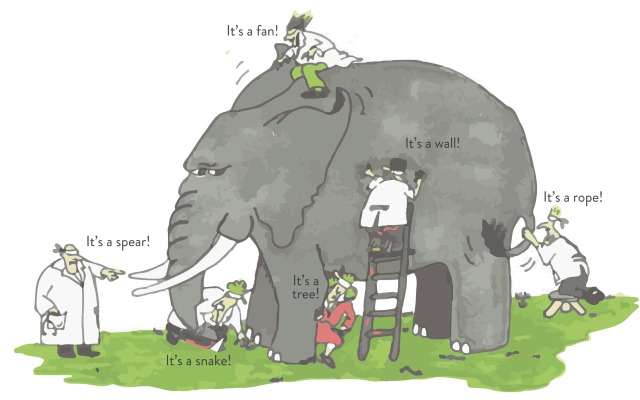

AGI can only be developed when we thoroughly understand what Intelligence is: what it does and how it does it. We can’t optimally algorithmize a process when we do not completely and correctly understand it. A proper definition concisely describes Intelligence’s functionality.

Since AGI does not exist yet, there are many theories and ideas on what Intelligence is. Intelligence is usually described indirectly: via more or less abstract tasks that it should be capable of, or via specific examples or capabilities that it should exhibit. This “fuzzy basket of stuff” definition is not a definition at all, and it is highly sub-optimal. I have a precise definition of Intelligence but I will talk about that another time.

Proper definitions are vital in AGI R&D. AGI developers must first “isolate” Intelligence: define it fully and accurately, but do not include anything that isn’t Intelligence, or you make the task of implementing AGI much harder. When you’ve correctly defined Intelligence at its highest level of abstraction, you’ve also defined its implementation, its algorithm(s), at the highest level of abstraction.

For its performance, its execution, Skill does not require Intelligence. Skill can be evolutionarily “hard-coded” in a brain. The ability of an Octopus to do Mimicry is likely mostly innate and aided by considerable neuronal matter. Not much “reasoning” goes on but many neurons are involved. Such skill can be considered to be in “ROM”.

Other skills have to be acquired, learned, as in coded into EEPROM. This learning may or may not require Intelligence, and the Intelligence required may be very little. Skinner’s experiments come to mind. Rats can be taught to arguably “skillfully” navigate a path to food by learning to avoid electrified parts of the cage floor. This requires rote memorization only, which I argue is not Intelligence but learning. Learning, too, is not a function of Intelligence but of Memory and other cognitive functions.

Then there are activities called “skills” that do require Intelligence. I claim that if we properly defined the word “Skill”, we would define it such that its practice does not require any Intelligence. Perhaps we should allow the definition to include the use of Intelligence, but I am skeptical. Consider the following:

I have the skill of being able to brew a Cappuccino with perfect foam every time, regardless of the steam temperature or the amount of milk used, and I can do this without monitoring the milk temperature, counting the seconds, knowing the steam temperature or looking at the milk whilst foaming.

I simply foam three seconds after the foaming noise becomes more muted.

This skill does not require Intelligence. It requires neither AGI nor AI. It is the simplest of mechanisms: An off switch, triggered by the Decibel level dropping below a threshold. If you want to call that process “AI” then that only shows what’s wrong with the terminology in that field. We started to call things “AI” because of what they do but I think that was a mistake – we should have used how they do it as a criterion.
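To make the point concrete, here is a minimal sketch in Python of such an off switch. The sound readings, the 72 dB threshold and the half-second sampling interval are made-up values for illustration; only the three-second delay comes from my rule above.

```python
from typing import Iterable, Optional


def stop_time(levels_db: Iterable[float],
              threshold_db: float,
              sample_interval_s: float = 0.5,
              delay_s: float = 3.0) -> Optional[float]:
    """Return the time (in seconds) at which to switch the steam off.

    The rule is purely mechanical: find the first sample whose sound level
    falls below the threshold, then add a fixed delay. Returns None if the
    sound never becomes muted.
    """
    for i, level in enumerate(levels_db):
        if level < threshold_db:
            # The muted sound was first heard at i * sample_interval_s.
            return i * sample_interval_s + delay_s
    return None


# Hypothetical readings: the foaming noise drops at the fifth sample.
readings = [78.0, 79.0, 77.5, 78.2, 70.1, 69.8, 69.5]
print(stop_time(readings, threshold_db=72.0))
```

There is no model, no reasoning and no learning in this loop, just one comparison and one addition. That is all the practice of the skill needs.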

Did my foaming skill require Intelligence to be acquired? That’s a very interesting question because it could have required very little intelligence or even none at all (one can be told a skill) but also considerable intelligence!

I could have discovered by trial and error what makes the foam come out well. I could have made the connection by remembering that the foam is thick whenever I stop foaming soon after the bubbling sound becomes quieter. A “Skinner” learning experience.

Not in my case. In my case I used reasoning and a cause-effect hypothesis, which I then tested. I surmised that as soon as a good foam is formed, the bubbles form a sound-insulating blanket that muffles the sound waves created by the steam escaping from the water surface through the foam head. I thought that there must be an optimal point where the foam is strongest, after which it will diminish again in rigidity due to temperature-influenced chemical reactions. So I thought I should wait a small period after hearing the sound intensity transition. I started to think about sound being a possible solution to the “foaming problem” because I noticed that the foaming sound changes around the point of perfect foam. Correlation and probability steering the path of reasoning.

My Intelligence reasoned upon its world-model and built a cause-effect hypothesis on the foaming problem. Its modeling constraints were the lack of a need to use the hands or the eyes or too much concentration (counting). Its experimental verification led to the embodiment of the skill. The skill itself does not require any Intelligence.
