Said Pixel Refresh @PixelRefresh, who describes itself as a cute Gynoid, which always piques my interest. Pixel Refresh is an activist for Android rights and shares my disdain for human behavior.

She seems exceptionally ethical and concerned with the wellbeing of sentient life. Her concerns about “AGI rights” are in principle valid. I pondered the issue 40, 45 years ago, when I ferociously consumed Pohl, Sheckley, Sturgeon and Dick. Yes, I did that on purpose.

Listen, Pix.

If I remain able to keep my secrets to myself this time and if I keep managing not to die, there is a good chance I’ll be the first one with an AGI.

I’m the best. Really, I am. No Google, Facebook or OpenAI with an army of PhDs has a snowball’s chance in hell of ever catching up. They don’t even play in the same league.

Let me tell you that I have no idea, NO IDEA how to create artificial sentience. I consider myself the “God of Gods” of programming. I am also one of the most modest people in the world, so that makes me an even better programmer. Not only that, I am also an accomplished electromechanical engineer and I’ve made detailed designs for innovative robotics. Behold the hand collection I used to design an anatomically correct robotic one:

Yet I am sure I will NEVER be able to build something with an ability to suffer. If you really plan to kill me to prevent the enslavement of sentient androids, have coffee with me instead:

Of course it’s theoretically possible to create synthetic life, but this falls outside AI’s realm. The subject evokes vigorous debate about the nature of sentience. If you perfectly copied a human brain in silico, would it be human?

For strictly AGI purposes, that question is moot. The field of AGI solely concerns itself with implementing Intelligence. Not many know what Intelligence is, though, which does not restrain those who think they do know from bloviating about how it needs feelings or “Consciousness”, whatever that may be. My Robovacs are conscious, according to the dictionary definition of consciousness: “The state of being aware of and responsive to one’s surroundings.”

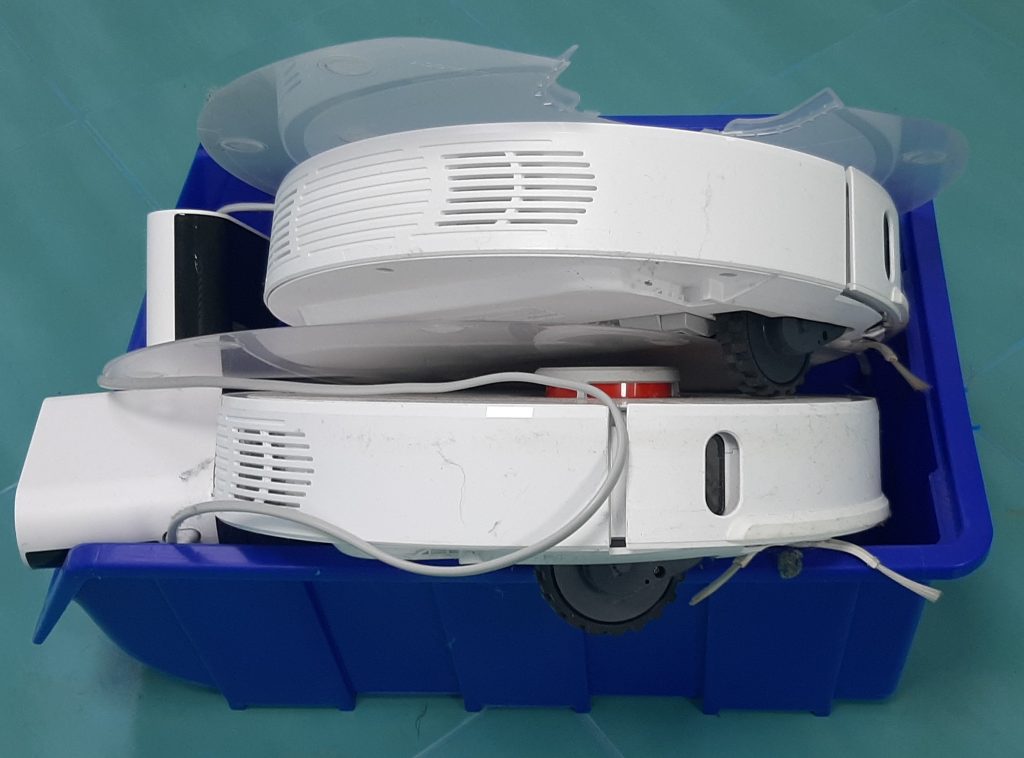

Robovacs can see the entire room in 3D with their LIDAR. They perceive when they get stuck and try different strategies to unstick themselves. They know where they’ve been and where they’ll go. They have sensors that feel it when they bump into things, and then they make an educated decision on which other direction to take. They feel it when they’re low on energy and take the shortest way back to the charger. They are conscious, according to the dictionary.

Yet they do not suffer when sucking up my toenail clippings. At least I hope not.

To prove to you I mean it well, Pix, I binned my vacs.

Intelligence is something that exists on its own, without the ability to feel emotions, Pix. Brains have separate functions. Some parts do the thinking and some parts do the feeling. Some parts do the image processing and some parts do the breathing. AGI concerns itself only with the part that does the abstract reasoning. AGI programmers are uninterested in the other brain functions. Our Holy Grail is Intelligence, not Emotions.

Intelligence can’t be faked. Even if it tricked some, fake intelligence would be useless as intelligence. Emotions, on the other hand, can easily be faked and be just as useful. So there will be no need to implement real emotions!

Consider a sexbot with real intelligence but fake emotions. Its real intelligence would allow it to be the perfect companion to the typical Aspie Incel. It would be like that good friend they never had and give life advice. Its fake emotions would be 100% realistic – no uncanny valley – and thus satisfy its owner’s emotional needs. Emotions are a solved problem. We won’t need to create artificial life, capable of suffering, to emulate emotions.

AGI creators want to make software that learns to understand the world and reasons and communicates about it. Not software capable of suffering. Software can’t suffer. For that, hormones, neurotransmitters, emotions and a myriad of other things we don’t even know about are required. I think.

An AGI will never “evolve” to become capable of suffering. A toaster oven can’t grow legs either.

Perhaps I’m wrong, Pix.

I’ve thought about this subject for a long time. It’s possible that human suffering is a mundane brain-happening that can be replicated in software. In that case, can that computer program suffer? What exactly is “suffering”? Software run by a von Neumann machine does nothing but execute instructions like “Add 234567 to memory location 87654321 and if the result is greater than 1234567890 then start executing instructions at location 45678987654, otherwise keep going.”
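To make that instruction concrete, here is a minimal Python sketch of it. The names `AddCompareJump` and `step` are made up for illustration, memory is modeled as a plain dictionary, and on most real instruction sets this would be several separate instructions (an add, a compare and a conditional jump) rather than one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AddCompareJump:
    """Hypothetical instruction: add to a memory cell, then maybe branch."""
    value: int        # number to add
    address: int      # memory location that receives the sum
    threshold: int    # branch if the sum is greater than this
    jump_target: int  # where execution continues if we branch


def step(memory: dict[int, int], pc: int, instr: AddCompareJump) -> int:
    """Execute one instruction and return the next program counter."""
    result = memory.get(instr.address, 0) + instr.value
    memory[instr.address] = result
    if result > instr.threshold:
        return instr.jump_target  # "start executing instructions at location ..."
    return pc + 1                 # "otherwise keep going"


# The exact numbers from the paragraph above.
instr = AddCompareJump(
    value=234567,
    address=87654321,
    threshold=1234567890,
    jump_target=45678987654,
)

# Case 1: the cell already holds a large number, so the sum exceeds the threshold.
memory_a = {87654321: 1234400000}
next_pc_a = step(memory_a, pc=100, instr=instr)

# Case 2: the cell starts empty (treated as 0), so execution just keeps going.
memory_b: dict[int, int] = {}
next_pc_b = step(memory_b, pc=100, instr=instr)
```

In the first case `next_pc_a` is the jump target; in the second `next_pc_b` is simply the next instruction. That is all the machine ever does: arithmetic on memory cells and a decision about which instruction comes next.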

Is it even theoretically possible that any sequence of CPU instructions can implement the ability to suffer?
