Extracting text from PDFs is in fact AI-complete/complex. A comprehensive list of issues is here: http://filingdb.com/b/pdf-text-extraction

Apart from the fact that no one has yet managed to extract knowledge from text in bulk, there are two main problems in unsupervised learning from large English text corpora: the availability of relevant, useful data, and the quality of that data, meaning how well it can be parsed by an automated text-to-knowledge converter.

I managed to obtain a large corpus of what is, in theory, ultra-high-quality data: non-fiction books about all kinds of topics useful to an AGI.

They are in the form of PDFs. Making your own PDF-to-text converter seems near-trivial but is in fact a massive undertaking, so I bought a source code license for $1000. The converter choked (hangs, crashes and extreme slowdowns) on all kinds of malformed PDFs, so it took me some days to fix three dozen issues in it, and I optimized some of the code by four orders of magnitude.

Many of the eBooks I obtained are not in English. Many others contain poorly OCR-ed data. I made a class that assesses the “English quality” level of a text.
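A minimal sketch of how such an "English quality" score could work, assuming a simple heuristic: the fraction of tokens that are very common English words. The word list, the function name `english_quality` and the heuristic itself are illustrative assumptions, not the actual class. Non-English text and badly OCR-ed text both tend to score low, because few of their tokens match.

```python
import re

# A tiny illustrative list of frequent English words (an assumption for this
# sketch; a real scorer would use a much larger vocabulary).
COMMON_ENGLISH = frozenset("""
the of and to a in is that it was for on are as with his they be at one have
this from or had by but not what all were we when your can said there use an
each which she do how their if will up other about out many then them these
so some her would make like him into time has look two more write go see no
way could people my than first been who its now find long down day did get
come made may part
""".split())

WORD_RE = re.compile(r"[A-Za-z']+")


def english_quality(text: str) -> float:
    """Return the fraction of tokens that are common English words (0.0 to 1.0)."""
    tokens = [t.lower() for t in WORD_RE.findall(text)]
    if not tokens:
        return 0.0
    hits = sum(1 for t in tokens if t in COMMON_ENGLISH)
    return hits / len(tokens)
```

A threshold on this score (chosen by inspecting real samples) could then be used to discard foreign-language or poorly OCR-ed books.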

Yet another problem is that publicly available text sources often contain many duplicates. Some texts occur ten times. This is bad for statistical analysis so I made a superfast, accurate deduplication class. That’s non-trivial and involves storing many snippet hashes of normalized text and then comparing all possible snippets of every text to these stored snippets.
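The snippet-hash idea can be sketched roughly as follows. This is a simplified illustration, not the actual class: it stores the hashes of all snippets (word runs of a fixed length) rather than a selected subset, and the names `Deduplicator`, `snippet_hashes`, the snippet size and the overlap threshold are all assumptions made for the example.

```python
import hashlib
import re


def normalize(text):
    """Lowercase the text and keep only alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def snippet_hashes(text, size=8):
    """Hash every run of `size` consecutive normalized words."""
    words = normalize(text)
    if not words:
        return set()
    count = max(1, len(words) - size + 1)
    return {
        hashlib.blake2b(" ".join(words[i:i + size]).encode("utf-8"),
                        digest_size=8).digest()
        for i in range(count)
    }


class Deduplicator:
    """Flags a text as a duplicate when most of its snippets were seen before."""

    def __init__(self, size=8, threshold=0.8):
        self.size = size
        self.threshold = threshold
        self.seen = set()

    def is_duplicate(self, text):
        hashes = snippet_hashes(text, self.size)
        if not hashes:
            return False
        overlap = len(hashes & self.seen) / len(hashes)
        if overlap >= self.threshold:
            return True
        # Only remember snippets of texts that are kept.
        self.seen |= hashes
        return False
```

Because the text is normalized before hashing, copies that differ only in case, punctuation or whitespace still match. Storing every snippet costs a lot of memory on a large corpus, which is one reason to store only a sample of them.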

A knowledge-extracting parser should ideally not have to deal with:

-Page numbers (they interrupt sentences that finish on the next page)

-Footnotes (they interrupt sentences that finish on the next page)

-Paragraph numbers

-Table of contents

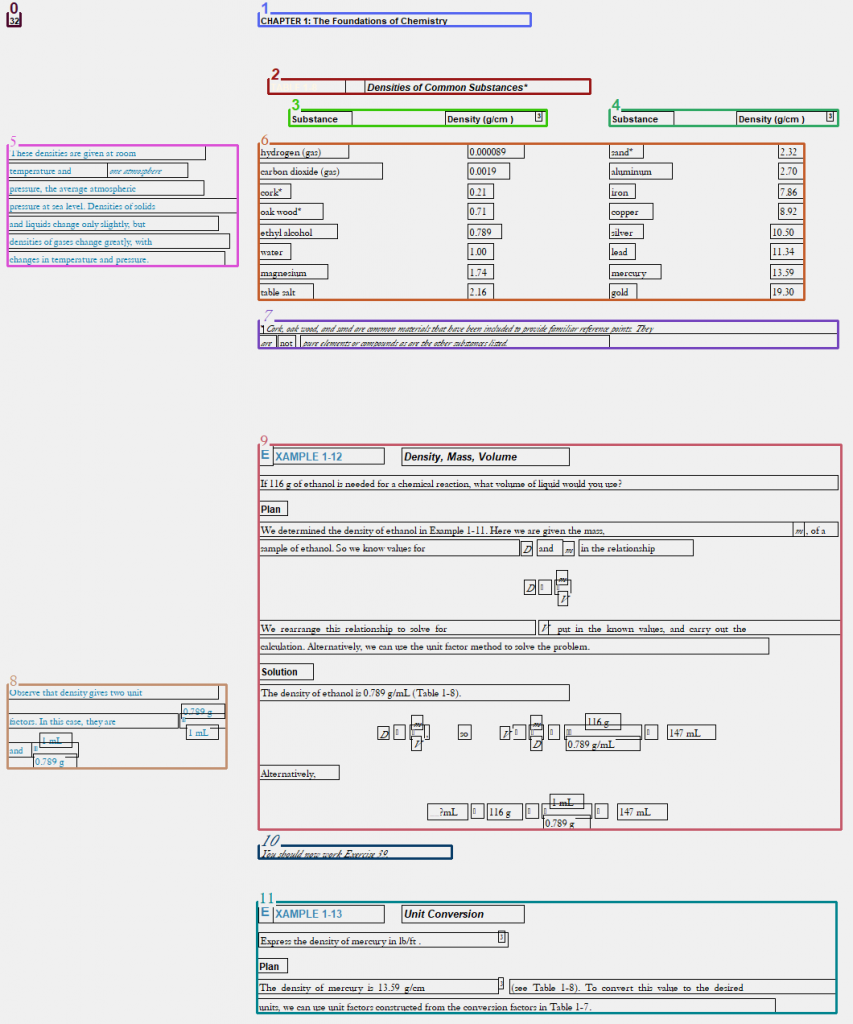

-Tables

-Headers and footers

So I built a class that removes page numbers, headers and footers, and either moves all footnotes to the end of the book, removes them altogether, or substitutes them into the text in parentheses. Its accuracy was lacking, however, so I decided not to run it on the plain extracted text but to have the PDF text extractor return meta information about font type, font size and text bounding box, so that my Meta-info class can determine which parts are footnotes and so on.
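To illustrate why font size and position help, here is a minimal sketch of a classifier working on such meta information. It is not the actual Meta-info class: the `Span` record, the normalized `y` coordinate (0.0 at the top of the page, 1.0 at the bottom), the margin width and the three rules are all assumptions chosen for the example.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Span:
    page: int
    text: str
    y: float          # assumed: vertical centre, 0.0 = page top, 1.0 = page bottom
    font_size: float


def classify(spans, margin=0.08, min_repeat=0.5):
    """Label each span 'body', 'page_number', 'header_footer' or 'footnote'."""
    if not spans:
        return []

    # Body font size: the size that covers the most characters.
    weight = Counter()
    for s in spans:
        weight[s.font_size] += len(s.text)
    body_size = weight.most_common(1)[0][0]

    def in_margin(s):
        return s.y < margin or s.y > 1 - margin

    def key(s):
        # Replace digits so "Page 12" and "Page 13" count as the same text.
        return re.sub(r"\d+", "#", s.text.strip().lower())

    # Number of distinct pages on which each margin text occurs.
    page_count = len({s.page for s in spans})
    repeats = Counter(k for k, _ in {(key(s), s.page) for s in spans if in_margin(s)})

    labels = []
    for s in spans:
        if in_margin(s) and s.text.strip().isdigit():
            labels.append("page_number")
        elif in_margin(s) and repeats[key(s)] / page_count >= min_repeat:
            labels.append("header_footer")
        elif s.font_size < body_size and s.y > 0.5:
            labels.append("footnote")
        else:
            labels.append("body")
    return labels
```

None of these three rules can be applied to plain extracted text, since the position and font size are lost there.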

Then there is the problem that, in spite of claims to the contrary, my PDF-to-text converter cannot convert ligatures, so about 15,000 different words came out as “?nancial” instead of “financial”, etc. I did not want to try to implement or bugfix that functionality in the converter, so I made a post-fixer-upper: a ligature substitution class.
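A minimal sketch of dictionary-based ligature repair, assuming the converter emits a single "?" where a ligature was and that a vocabulary of known words is available. The function name, the ligature list and the "exactly one match" rule are assumptions for this illustration, not the actual class. For brevity it replaces every placeholder in a word with the same ligature.

```python
import re

# Common Latin ligatures, longest first.
LIGATURES = ("ffi", "ffl", "ff", "fi", "fl")


def fix_ligatures(text, vocabulary, placeholder="?"):
    """Replace a placeholder inside a word with the one ligature that yields a known word."""
    token_re = re.compile(r"[A-Za-z]*%s[A-Za-z]*" % re.escape(placeholder))

    def repair(match):
        word = match.group(0)
        if len(word) == len(placeholder):
            return word  # a bare placeholder, e.g. a real question mark
        candidates = [word.replace(placeholder, lig) for lig in LIGATURES]
        valid = [c for c in candidates if c.lower() in vocabulary]
        # Only substitute when the result is unambiguous.
        return valid[0] if len(valid) == 1 else word

    return token_re.sub(repair, text)
```

With a vocabulary containing "financial", "office", "flow" and "difficult", the input `"The ?nancial o?ce reported cash ?ow. Is it di?cult?"` comes back with all four words repaired and the final question mark left alone. Ambiguous cases such as "?at" (both "fiat" and "flat" are words) are deliberately left untouched.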

Any word can be arbitrarily cut with a dash at the end of a line, including words that normally contain a dash. I made a sophisticated class that handles EOL dashes properly. I had to create a large dictionary of English words, ligature words and words with dashes, but the system is flexible enough to properly handle situations where the word is unknown.
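The core decision (drop the dash or keep it) can be sketched like this. It is a simplified illustration under stated assumptions, not the actual class: the function name, the order of the dictionary lookups and the fallback rule for unknown words (keep the dash only if every part is itself a known word) are all choices made for the example.

```python
import re

# A word (possibly already containing dashes), a dash at end of line,
# then the continuation at the start of the next line.
EOL_DASH_RE = re.compile(r"([A-Za-z]+(?:-[A-Za-z]+)*)-\n[ \t]*([A-Za-z]+)")


def join_hyphenated(text, vocabulary):
    """Rejoin words that were split by a dash at the end of a line."""

    def repair(match):
        head, tail = match.group(1), match.group(2)
        joined = head + tail
        hyphenated = head + "-" + tail
        if joined.lower() in vocabulary:
            return joined          # e.g. "infor-" + "mation"
        if hyphenated.lower() in vocabulary:
            return hyphenated      # e.g. "well-" + "known"
        # Unknown word: assume a compound if every part is a known word.
        parts = head.split("-") + [tail]
        if all(p.lower() in vocabulary for p in parts):
            return hyphenated
        return joined

    return EOL_DASH_RE.sub(repair, text)
```

Note that the continuation is pulled up onto the previous line, so the line break at that position disappears.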

On top of all this comes the need to make the PDF-to-text conversion and cleanup multithreaded, since I have millions of files and the process is strongly CPU-bound.
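As an illustration of the fan-out, here is a minimal Python sketch. It uses worker processes rather than threads, because in CPython threads do not speed up CPU-bound work; that is a property of the language used for the sketch, not a statement about the actual implementation. The function name `convert_all` and the `convert` callback are hypothetical.

```python
from concurrent.futures import ProcessPoolExecutor, as_completed


def convert_all(paths, convert, executor_cls=ProcessPoolExecutor, workers=None):
    """Run `convert(path)` for every path in parallel.

    Returns (results, failures): two dicts keyed by path, holding the
    converted text and the raised exception respectively.
    """
    results, failures = {}, {}
    with executor_cls(max_workers=workers) as pool:
        futures = {pool.submit(convert, path): path for path in paths}
        for future in as_completed(futures):
            path = futures[future]
            try:
                results[path] = future.result()
            except Exception as exc:
                # One malformed file must not stop the whole batch.
                failures[path] = exc
    return results, failures
```

With the default process pool, `convert` has to be a module-level function so that it can be sent to the workers. This sketch collects crashes per file but does not deal with hangs; those would additionally need a per-file time limit.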

To determine which text belongs together and which parts are columns, headers, footers etc., it is necessary to know the LOCATION and other properties of the text. I have accomplished the part that determines which text belongs together.
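A minimal sketch of the general idea of grouping by location, not the actual implementation: words whose vertical centres are close are put on the same line, and a line is split wherever the horizontal gap between neighbouring words is large, which is how a column break typically shows up. The `Word` record, the coordinate units and both tolerances are assumptions for the example.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    x0: float  # left edge
    y0: float  # top edge
    x1: float  # right edge
    y1: float  # bottom edge


def group_lines(words, y_tol=2.0):
    """Group words into lines by vertical centre, each line sorted left to right."""
    lines = []
    for w in sorted(words, key=lambda w: ((w.y0 + w.y1) / 2, w.x0)):
        centre = (w.y0 + w.y1) / 2
        if lines and abs(centre - lines[-1]["centre"]) <= y_tol:
            lines[-1]["words"].append(w)
        else:
            lines.append({"centre": centre, "words": [w]})
    return [sorted(line["words"], key=lambda w: w.x0) for line in lines]


def split_columns(line, max_gap=20.0):
    """Split one line into text segments wherever the horizontal gap is large."""
    segments = [[line[0]]]
    for prev, cur in zip(line, line[1:]):
        if cur.x0 - prev.x1 > max_gap:
            segments.append([cur])
        else:
            segments[-1].append(cur)
    return [" ".join(w.text for w in seg) for seg in segments]
```

Real pages need more than this (rotated text, varying font sizes, ragged columns), so fixed tolerances like the ones above are only a starting point.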

The next step will be doing OCR on subset-embedded fonts with mathematical glyphs because there is no other way to know what their code points represent.
